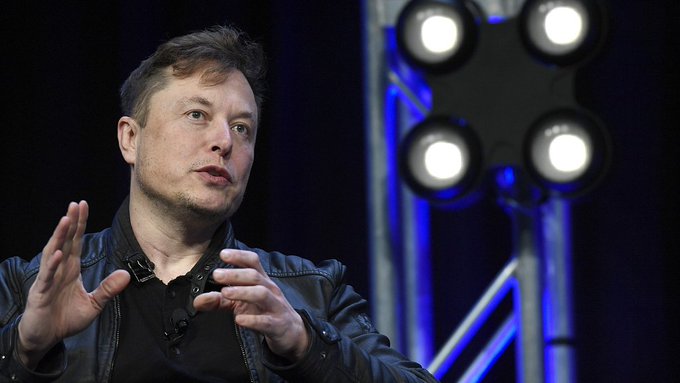

Elon Musk Sues OpenAI: Fight Over Super-Smart Machines!

Imagine computers way smarter than anything we've ever seen, like in those sci-fi movies! That's what Artificial Intelligence (AI) is all about. Elon Musk, the guy behind Tesla and SpaceX, is a big name in tech. He helped start a company called OpenAI in 2015 to make this super-smart AI safe and helpful for everyone, not scary like in the movies.

But here's the twist! Elon thinks OpenAI isn't following the plan anymore. So, he's suing them! Why? Because he believes they care more about making money than keeping us safe. He's also worried about their team-up with Microsoft, a giant tech company. He fears Microsoft might take control and use the AI for its own benefit, not everyone's.

OpenAI says that’s not true! They insist they’re still focused on safe AI, and working with Microsoft just helps them get the money they need to keep going. This lawsuit is a big deal because it raises important questions about the future of AI. Let’s dive deeper and see what all the fuss is about!

Why Did Elon Musk Sue OpenAI?

Elon Musk sued OpenAI, a company he co-founded, because of a disagreement about the direction of AI development. Here’s the breakdown:

- Original Goal: OpenAI was created to develop safe and beneficial AI for everyone, operating as a non-profit and sharing research openly. Musk, a strong advocate for responsible AI, supported this approach.

- The Shift: Things changed in 2019. OpenAI switched to a "capped-profit" model, allowing outside investment while limiting investors' returns. It also partnered with Microsoft, giving Microsoft exclusive access to some of its AI models.

- Musk’s Concerns: Musk believes this shift prioritizes profit over safety and transparency. He worries the Microsoft partnership creates a conflict of interest, with AI potentially benefiting Microsoft more than humanity.

Here’s a deeper look at Musk’s concerns:

- Profit vs. Safety: Musk fears a focus on profit could lead to neglecting safety measures. Powerful AI in the wrong hands could be dangerous.

- Transparency Concerns: Musk values open-source research, where everyone can see how AI is developed. He believes this transparency helps identify and address risks.

OpenAI disagrees:

- Funding for Progress: They argue that responsible AI needs resources. Microsoft’s investment helps them attract talent and accelerate research, ultimately leading to safer AI faster.

- Controlled Disclosure: OpenAI believes some secrecy is necessary for ongoing research and protecting intellectual property. They claim they’ll still share important information responsibly.

The Lawsuit’s Impact: A Crossroads for AI Development

Elon Musk's lawsuit against OpenAI has ignited a firestorm of debate, raising critical questions that go beyond the courtroom. These questions go to the core of how AI will be developed and used in the future. Here's a deeper dive into the three key issues highlighted by the lawsuit:

1. Safety First? Balancing Progress with Protection

The lawsuit reignites the debate about the appropriate balance between rapid AI development and prioritizing safety. Musk argues that the pursuit of profit could lead to cutting corners on safety measures. He envisions a scenario where powerful AI falls into the wrong hands, potentially causing significant harm through uses such as warfare, autonomous weapons, or large-scale social manipulation.

OpenAI counters that responsible AI development requires significant resources. Funding from corporations like Microsoft allows them to accelerate research and attract top talent. They believe faster progress ultimately leads to safer AI as they can develop safeguards and ethical frameworks alongside the technology itself.

The Challenge: Finding the sweet spot. Can we promote rapid AI advancement while ensuring robust safety measures are built-in from the beginning? This will require collaboration between researchers, policymakers, and the public to develop clear guidelines and ethical considerations.

2. Openness or Secrecy? Balancing Transparency with Innovation

The lawsuit also sheds light on the role of transparency in AI development. Musk champions open-source research, where research methods and results are freely shared. He believes this allows for greater collaboration among experts, enabling them to identify and mitigate potential risks more effectively. Openness fosters scrutiny and public trust in the development process.

OpenAI acknowledges the value of transparency. However, they argue that some level of secrecy is necessary for ongoing research and protecting intellectual property. They need to safeguard their competitive edge and prevent bad actors from exploiting their research for malicious purposes.

The Challenge: Striking a balance. How much secrecy is acceptable while still fostering responsible development? Can OpenAI and other institutions find ways to be transparent about their progress without compromising their research or giving away a competitive edge?

3. Who Controls AI? The Power Dynamic in Development

The lawsuit also raises concerns about who ultimately controls the development and deployment of AI. Musk’s apprehension regarding the Microsoft partnership stems from the possibility of a large corporation wielding significant influence over AI’s direction. He worries about the potential for AI to be used primarily to benefit Microsoft’s business interests rather than serving humanity as a whole.

The Challenge: Ensuring democratization of AI. Can we develop frameworks that ensure AI is not solely controlled by corporations or governments, but rather serves the collective good? Should there be international collaborations and regulations to ensure responsible development and prevent any single entity from dominating this powerful technology?

The Future of AI: A Landscape Shaped by the Lawsuit

The outcome of Elon Musk’s lawsuit against OpenAI has the potential to significantly impact the trajectory of AI development. Let’s explore some possible scenarios:

Scenario 1: OpenAI Wins – Balancing Act with Scrutiny

- Continuation of the Model: If OpenAI prevails, they might continue with their current model of capped-profit partnerships.

- Focus on Demonstrating Safety: However, they’ll likely face increased scrutiny and pressure to demonstrably prioritize safety. This could involve increased transparency in their research methods and safety protocols.

- Shift in Public Perception: Public trust will be crucial. OpenAI will need to actively engage with the public and address concerns about potential risks associated with AI development.

Scenario 2: Rise of Open Source – Collaboration for Safety

- Push for Transparency: The lawsuit could act as a catalyst for a shift towards more open-source research in AI.

- Collaboration and Scrutiny: Increased transparency would foster greater collaboration among researchers and experts. This could lead to faster identification and mitigation of risks as more minds work on the problem.

- Building Public Trust: Open-source practices could also help build public trust in AI development, as people can see firsthand the research and safety measures being implemented.

Scenario 3: Regulatory Response – A Framework for Safety

- Government Intervention: The lawsuit might prompt governments to step in and create stricter regulations for AI development.

- Focus on Ethical Considerations: These regulations could address ethical considerations alongside safety concerns.

- Potential for Stifling Innovation: Overly restrictive regulations could hinder the pace of AI development. However, a well-designed regulatory framework could help ensure responsible development while promoting innovation.

Beyond the Scenarios: A Collective Effort

It’s important to remember that these are just potential outcomes. The true future of AI will likely be shaped by a combination of factors beyond just the lawsuit. Here are some additional considerations:

- Public Discourse: Continued public discourse about the ethics of AI development is crucial. We need to have open discussions about the potential risks and benefits of AI, and what kind of future we want to create with this technology.

- International Collaboration: The development and deployment of AI are global issues. International collaboration between governments, research institutions, and the private sector will be essential to ensure responsible and safe AI for all.

- Individual Responsibility: Every stakeholder in the AI ecosystem, from researchers to programmers to users, has a responsibility to prioritize ethical considerations and advocate for safe and beneficial AI development.

Ultimately, the lawsuit serves as a wake-up call. It highlights the need for a comprehensive approach to AI development that prioritizes safety, transparency, and ethical considerations. By working together, we can ensure that AI becomes a tool that empowers humanity and shapes a brighter future for all.

Please share your thoughts in the comments. At theproductrecap.com, we are open to friendly suggestions and helpful input to keep awareness at its peak.